February 24, 2022

A Vercel-like PaaS beyond Jamstack with Kubernetes and GitOps, part II

Gitlab pipeline and CI/CD configuration

This article is the second part of the A Vercel-like PaaS beyond Jamstack with Kubernetes and GitOps series.

A Vercel-like PaaS beyond Jamstack with Kubernetes and GitOps

- Introduction: Some reasons to build a PaaS with Kubernetes and GitOps practices

- Part I: Cluster setup

- Part II: Gitlab pipeline and CI/CD configuration

- Part III: Applications and the Dockerfile

- Part IV: Kubernetes manifests

- Caveats and improvements to a PaaS built with Kubernetes and GitOps practices

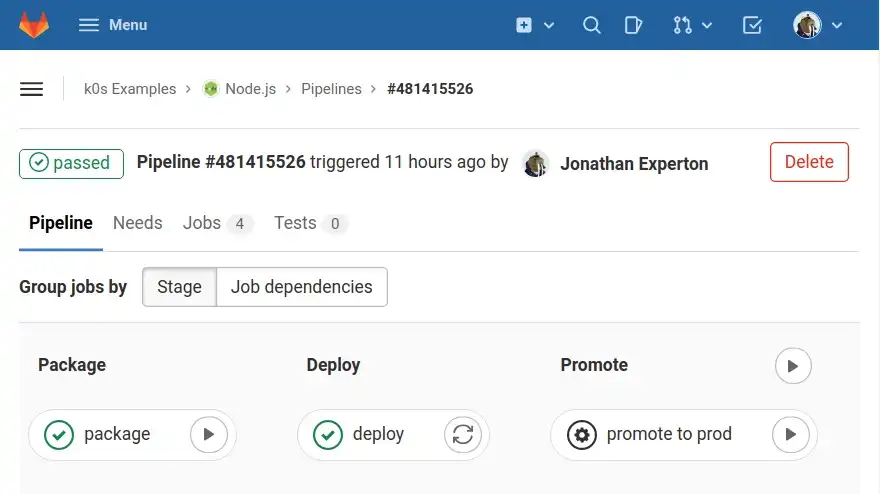

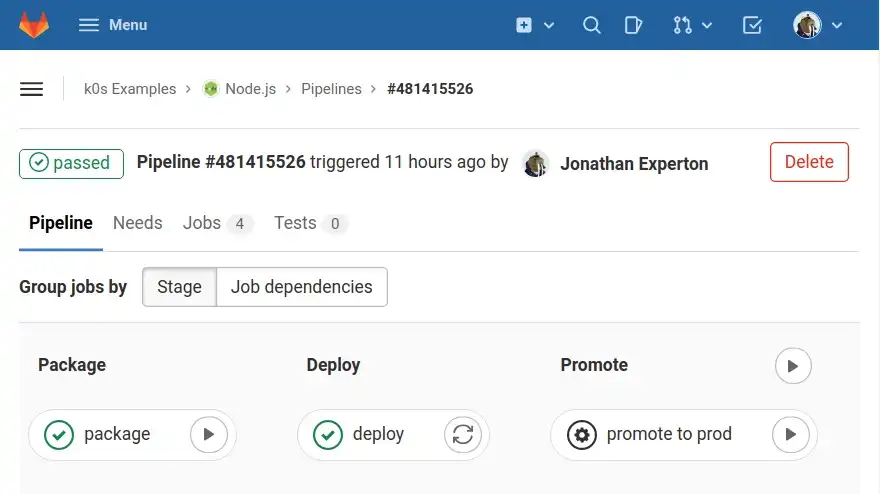

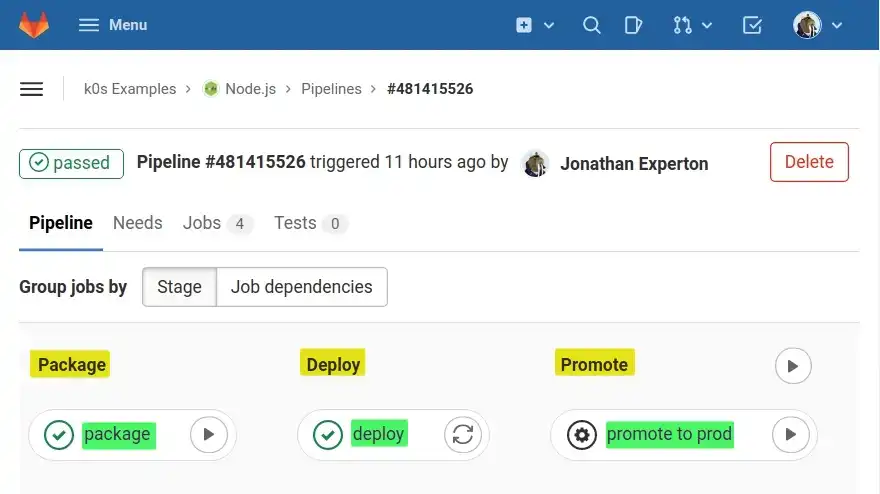

After installing a k0s Kubernetes cluster in part I, I'm now going to set up repositories and CI/CD configuration. Then, I'll set up a pipeline to build and deploy applications to Kubernetes. This is what it looks like when completed:

A Vercel-like pipeline done with GitLab

I'm using GitLab here mostly because I've been using it for 10 years and it's the platform I know best. In my opinion, it has long been the best platform in its market. However, the competition has closed the gap in recent years, and picking one platform over another is now mostly a matter of preference or partnership.

I've already set up the same workflow with Azure DevOps in the past so I'm confident it could be set up with many other CI/CD platforms. I'm not using GitLab's Kubernetes integration features here.

In the future, I might use Dagger to implement a cross-platform CI workflow.

- Introduction

- Grant GitLab CI/CD access to the Kubernetes cluster

- Grant Kubernetes access to the image registry

- Add pipeline configuration file and stage declaration

- The package stage configuration

- The deploy stage configuration

- The promote stage configuration

- The delete stage configuration

- Next step

Introduction

For the purpose of this experiment, I've created Node.js, PHP, Python and Ruby web applications. These are the applications I'll deploy to the Kubernetes cluster. They all have the same two endpoints:

- /version returns the commit short hash of the built code, such as 7c77eb36

- / returns a random name, such as distracted_clarke

To generate names, I've copied the code from Docker-CE that generates Docker container names and translated it to each application's language.

I also added runtime information in an HTTP header:

    $ curl -i https://nodejs.k0s.gaudi.sh | grep env
    env: node-16.13.2

For the simplicity of the experiment, I've kept these apps very basic. One might think a higher complexity in the code could impact the reliability of the setup. I'm confident it does not.

I've been running much more complex Next.js and NextJS apps, some with in-memory MongoDB servers, on AKS using a similar setup. For about two years now, it has been reliable as long as the allocated hardware resources keep up with the cluster workload.

1. Grant GitLab CI/CD access to the Kubernetes cluster

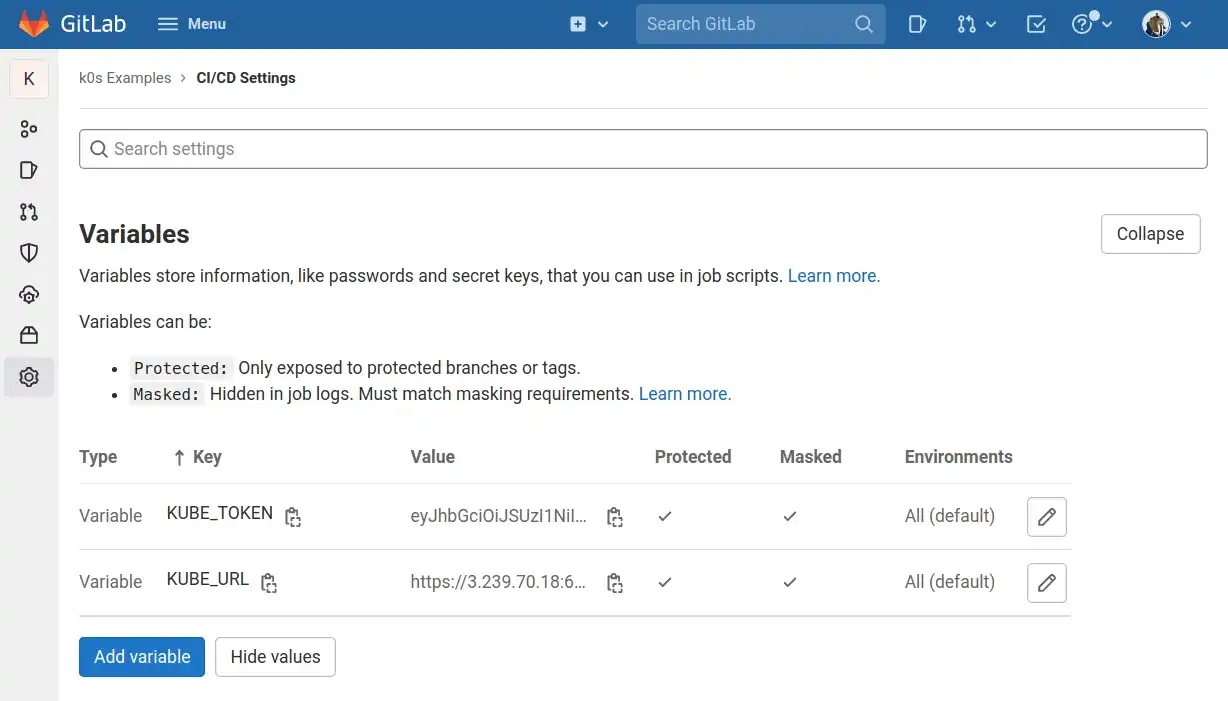

The first thing I want to do is allow GitLab to query the Kubernetes cluster from pipeline jobs. To do so, I need to provide GitLab with the cluster's API URL, and a token to perform requests as an authorized user. I'll store these values in GitLab's CI/CD variables.

I've added every application repository to the k0s-examples group so that they can share the same inherited group CI/CD variables. Then, I'll only have to update the group variables when I recreate a fresh Kubernetes cluster.

Variables must be added in the Settings > CI/CD > Variables section of the group.

Once values are added and revealed, the section looks like this:

GitLab's CI/CD variables interface

These variables will be used to configure kubectl at the deploy stage.
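Since every kubectl call in the pipeline depends on these two variables, a job can check them before doing anything else. The snippet below is a minimal sketch of such a guard; the require_vars helper is a hypothetical name of mine and is not part of the repositories described here.

```shell
#!/bin/sh
# Hypothetical helper: fail fast when a required CI/CD variable is missing or empty.
require_vars() {
  missing=0
  for name in "$@"; do
    # Indirect lookup of the variable called $name (POSIX-compatible).
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing CI/CD variable: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Possible usage in a job script, before any kubectl call:
# require_vars KUBE_URL KUBE_TOKEN || exit 1
```

Only pass trusted variable names to this function, because it relies on eval for the indirect lookup.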

The KUBE_URL variable

The cluster API URL can be retrieved from the k0s server terminal I've opened in part I:

    $ sudo k0s kubeconfig admin \
      | sed 's/'$(ip r | grep default | awk '{ print $9}')'/'$(curl -s ifconfig.me)'/g' \
      | grep 'server: https' \
      | awk '{ print $2 }'
    WARN[2022-02-07 22:51:36] no config file given, using defaults
    https://3.89.90.202:6443

The KUBE_TOKEN variable

I create an admin user for GitLab from the k0s server, then copy and paste its authentication token from the terminal to GitLab:

    # first create gitlab user
    $ sudo k0s kubectl create serviceaccount gitlab -n kube-system
    $ sudo k0s kubectl create clusterrolebinding gitlab \
      --clusterrole=cluster-admin \
      --serviceaccount=kube-system:gitlab
    # then print the token value
    $ sudo k0s kubectl get secrets \
      -n kube-system \
      -o jsonpath="{.items[?(@.metadata.annotations['kubernetes\.io/service-account\.name']=='gitlab')].data.token}" \
      | base64 --decode
    eyJhbGciOiJSUzI1NiIsImtpZCI6IkpNa2lUUTVLTVJiVExDcUY5Y0JEaDY2MEZNT3NEM1Z...

2. Grant Kubernetes access to the image registry

Once GitLab can query the cluster, I have to allow GitLab to push images into a registry and to allow Kubernetes to pull those images.

GitLab automatically provides an image registry for each repository, called Container Registry, where I'll push Docker images built at the package stage of the GitLab pipeline and then pull them from the Kubernetes cluster at the deploy stage.

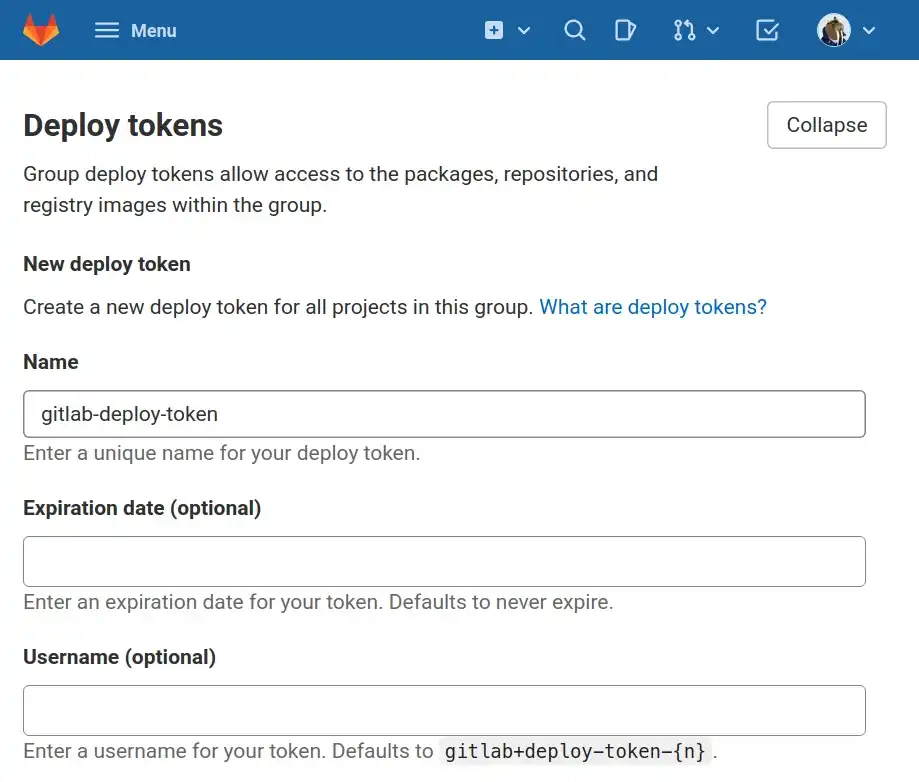

From Settings > Repository > Deploy token, I create a deploy token named gitlab-deploy-token (check the documentation to know why this name) and grant it the read_registry permission only:

Adding a deploy token to GitLab

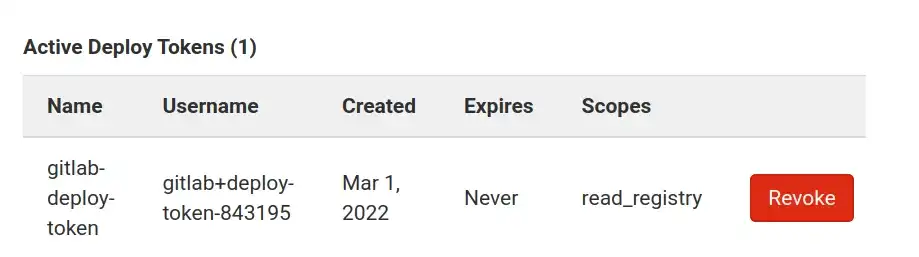

Once created, the token is displayed in the active deploy token list:

Active deploy token list

This token will populate the values of the CI_DEPLOY_USER and CI_DEPLOY_PASSWORD variables in pipelines. They are required to log in to the GitLab image registry and are passed to Kubernetes so that the cluster can pull images at the deploy stage.

3. Add pipeline configuration file and stage declaration

GitLab is set up. Now I can create the pipeline configuration and use the variables I've added in the two previous sections.

The pipeline I'm creating consists of four stages:

- Package the application in a Docker image.

- Deploy this image to a Kubernetes cluster and generate a unique URL to access this application instance, such as https://7c77eb36.nodejs.k0s.gaudi.sh

- Promote the instance to production by pointing a non-prefixed URL to it, such as https://nodejs.k0s.gaudi.sh

- Remove the application instance.

This is what it looks like in GitLab:

A Vercel-like pipeline done with GitLab

In the root folder of each repository, I create a .gitlab-ci.yml file that contains the configuration of the GitLab pipeline.

I add the service to use the Docker-in-Docker strategy in pipeline jobs:

    # the base image that runs the pipeline
    image: docker:19.03.13
    # docker service to run docker-in-docker
    services:
      - docker:19.03.13-dind

Then the stages that will run sequentially:

    stages:
      - package
      - deploy
      - promote
      - delete

I've highlighted the different stages and jobs in the screenshot below to illustrate how each stage contains a single job and how stages run one after another. The delete stage is not visible here:

A pipeline is made of stages and jobs

4. The package stage configuration

Before deploying to the cluster, I have to package the application in a Docker image and push it to the Container Registry.

Since each stage has only one job, I use the same stage name and job name. The excerpt below shows the configuration of the package job:

    package: # job name
      stage: package # attach this "package" job to the "package" stage
      when: manual # remove this to deploy automatically
      image: docker:19.03.13-dind # use docker-in-docker
      script: |
        # login to the image registry
        echo ${CI_REGISTRY_PASSWORD} | docker login -u ${CI_REGISTRY_USER} ${CI_REGISTRY} --password-stdin
        # build docker image
        docker build -t ${CI_REGISTRY_IMAGE}:${CI_COMMIT_SHORT_SHA} \
          --build-arg COMMIT_SHORT_HASH=${CI_COMMIT_SHORT_SHA} .
        # push docker image
        docker push ${CI_REGISTRY_IMAGE}:${CI_COMMIT_SHORT_SHA}

I'm not using the CI_DEPLOY_USER and CI_DEPLOY_PASSWORD values I created in the previous section. Instead, I use CI_REGISTRY_USER and CI_REGISTRY_PASSWORD, a short-lived value that is allowed to write to the registry:

The password to push containers to the project's GitLab Container Registry. Only available if the Container Registry is enabled for the project. This password value is the same as the CI_JOB_TOKEN and is valid only as long as the job is running. Use the CI_DEPLOY_PASSWORD for long-lived access to the registry. (GitLab documentation)

I use the git commit short hash to tag the built image with CI_COMMIT_SHORT_SHA. I also pass this value to the Docker build command as an argument to store it in the Docker image and return it with the /version application endpoint.
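To make the tagging scheme concrete, here is a small sketch of how the image reference is composed. GitLab defines CI_COMMIT_SHORT_SHA as the first eight characters of CI_COMMIT_SHA, which is what the function reproduces. The image_ref name and the registry path are placeholders I've made up for illustration.

```shell
#!/bin/sh
# Build the image reference the way the package job tags it:
# <registry image path>:<first eight characters of the commit SHA>.
image_ref() {
  registry_image=$1
  commit_sha=$2
  short_sha=$(printf '%s' "$commit_sha" | cut -c1-8)
  printf '%s:%s\n' "$registry_image" "$short_sha"
}

# Placeholder values for illustration only.
image_ref "registry.example.com/k0s-examples/nodejs" \
  "7c77eb36a1b2c3d4e5f60718293a4b5c6d7e8f90"
```

Because the tag is derived from the commit, every pipeline run produces an image that can be traced back to the exact code it was built from.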

5. The deploy stage configuration

The Docker image has been pushed. Now I can trigger a Kubernetes deployment using kubectl and manifest files stored in the repository.

This job starts automatically when the package stage completes. It doesn't require Docker-in-Docker. Instead, I use an image provided by Bitnami that contains kubectl:

    deploy:
      stage: deploy
      needs: [package] # needs "package" stage completion before running
      image:
        name: bitnami/kubectl:latest
        entrypoint: ['']
      script: |
        # declare Kubernetes namespace to use
        export KUBE_NAMESPACE=${CI_PROJECT_NAME}-${CI_COMMIT_SHORT_SHA}
        # declare the public URL of the deployment
        export KUBE_INGRESS_HOST=${CI_COMMIT_SHORT_SHA}.${CI_PROJECT_NAME}.k0s.gaudi.sh
        # add KUBE_URL, KUBE_TOKEN and KUBE_NAMESPACE to kubectl
        ./sh/configure-kubectl.sh
        # inject values in manifests and deploy
        ./sh/deploy.sh

The KUBE_NAMESPACE variable refers to the Kubernetes namespace where I want to place any resource created by kubectl during the job execution. This way I can group components together, which makes removing them easier at the delete stage.

The KUBE_INGRESS_HOST variable refers to the public hostname I want to use to route network traffic to the application instance:

    $ curl https://7c77eb36.nodejs.k0s.gaudi.sh
    vigorous_cray
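The two derived values can be sketched as small functions, together with a check that the namespace is a valid Kubernetes name. Namespace names must be RFC 1123 labels, so a project name with uppercase letters or underscores would be rejected by the cluster. The function names below are hypothetical and only meant to illustrate the naming scheme.

```shell
#!/bin/sh
# Derive the per-commit namespace and hostname used by the deploy job.
# $1 = project name, $2 = commit short hash, $3 = base domain (optional).
deployment_namespace() { printf '%s-%s\n' "$1" "$2"; }
deployment_host() { printf '%s.%s.%s\n' "$2" "$1" "${3:-k0s.gaudi.sh}"; }

# Kubernetes namespace names must be RFC 1123 labels: at most 63 characters,
# lowercase alphanumerics or '-', starting and ending with an alphanumeric.
is_valid_namespace() {
  [ "${#1}" -le 63 ] || return 1
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]([a-z0-9-]*[a-z0-9])?$'
}

# Example with the values used in this article.
ns=$(deployment_namespace nodejs 7c77eb36)
is_valid_namespace "$ns" && echo "namespace: $ns"
echo "host: $(deployment_host nodejs 7c77eb36)"
```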

Once variables are declared, the first thing I want to do is to configure kubectl with the KUBE_URL and KUBE_TOKEN values I've added in section 1.

The configure-kubectl.sh script

The job script runs inside the bitnami/kubectl image and calls the configure-kubectl.sh script first. This script also sets KUBE_NAMESPACE on the kubectl context, so all actions performed with kubectl will be done under this namespace:

    #!/bin/sh
    kubectl config set-cluster k0s --server=${KUBE_URL} --insecure-skip-tls-verify=true
    kubectl config set-credentials gitlab --token=${KUBE_TOKEN}
    kubectl config set-context ci --cluster=k0s
    kubectl config set-context ci --user=gitlab
    kubectl config set-context ci --namespace=${KUBE_NAMESPACE}
    kubectl config use-context ci
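As a possible variation, the three set-context calls can be merged into one, since kubectl config set-context accepts the --cluster, --user and --namespace flags together. The sketch below wraps the same commands in a function. The KUBECTL override is an assumption of mine, added only so the function can be dry-run with echo on a machine without kubectl.

```shell
#!/bin/sh
# Same configuration as configure-kubectl.sh, with a single set-context call.
# KUBECTL is a hypothetical override so the function can be dry-run with "echo".
configure_kubectl() {
  kubectl_bin=${KUBECTL:-kubectl}
  "$kubectl_bin" config set-cluster k0s --server="${KUBE_URL}" --insecure-skip-tls-verify=true &&
  "$kubectl_bin" config set-credentials gitlab --token="${KUBE_TOKEN}" &&
  "$kubectl_bin" config set-context ci --cluster=k0s --user=gitlab --namespace="${KUBE_NAMESPACE}" &&
  "$kubectl_bin" config use-context ci
}

# Dry run: set KUBECTL=echo to print the arguments instead of calling kubectl.
# KUBECTL=echo; configure_kubectl
```

Chaining the calls with && stops the script at the first failing command instead of carrying on with a half-configured context.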

The deploy.sh script

The deploy.sh file contains 3 subtasks:

- It calls kubectl to store the deploy token in a Kubernetes Secret to allow Docker images to be pulled from the GitLab Container Registry by the Kubernetes Deployment later on.

- It replaces strings such as __CI_REGISTRY_IMAGE__ with the actual value of the CI_REGISTRY_IMAGE variable in yaml manifest files. I use this solution to make up for the lack of a template engine in this setup. I do a simple find and replace, using sed and "|" instead of "/" as the delimiter because CI_REGISTRY_IMAGE contains "/" characters.

- It calls kubectl to deploy the app to the cluster.

    ...
    # 1. add credentials to pull image from gitlab
    kubectl create secret docker-registry gitlab-registry \
      --docker-server="${CI_REGISTRY}" \
      --docker-username="${CI_DEPLOY_USER}" \
      --docker-password="${CI_DEPLOY_PASSWORD}" \
      --docker-email="${GITLAB_USER_EMAIL}" \
      -o yaml --dry-run=client | kubectl apply -f -
    # 2. replace __tokens__ in yaml files with real values
    find manifests -type f -exec \
      sed -i -e 's|__CI_REGISTRY_IMAGE__|'${CI_REGISTRY_IMAGE}'|g' {} \;
    find manifests -type f -exec \
      sed -i -e 's|__CI_COMMIT_SHORT_SHA__|'${CI_COMMIT_SHORT_SHA}'|g' {} \;
    ...
    # 3. deploy app to cluster
    kubectl apply -f manifests/
    ...
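The token replacement step can be tried locally without a cluster. The sketch below applies the same sed substitution, with "|" as the delimiter, to a throwaway manifest. The replace_token function, the temporary directory and the registry path are all illustrative assumptions, and the function avoids sed -i so that it behaves the same with GNU and BSD sed.

```shell
#!/bin/sh
# Replace __TOKEN__ placeholders in every file under a directory.
# "|" is the sed delimiter because image paths contain "/".
# Values containing "|" or "&" would need escaping first.
replace_token() {
  dir=$1 token=$2 value=$3
  find "$dir" -type f | while IFS= read -r file; do
    tmp=$(mktemp)
    # Write to a temp file outside the directory, then copy the content back.
    sed "s|__${token}__|${value}|g" "$file" > "$tmp" && cat "$tmp" > "$file"
    rm -f "$tmp"
  done
}

# Throwaway manifest for illustration only.
demo_dir=$(mktemp -d)
printf 'image: __CI_REGISTRY_IMAGE__:__CI_COMMIT_SHORT_SHA__\n' > "$demo_dir/deployment.yaml"
replace_token "$demo_dir" CI_REGISTRY_IMAGE "registry.example.com/k0s-examples/nodejs"
replace_token "$demo_dir" CI_COMMIT_SHORT_SHA "7c77eb36"
cat "$demo_dir/deployment.yaml"
rm -rf "$demo_dir"
```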

6. The promote stage

Finally, given an existing deployment such as 7c77eb36.nodejs.k0s.gaudi.sh, I want to point the production domain nodejs.k0s.gaudi.sh to the same deployment.

This stage creates a new Kubernetes Ingress to route the production domain to the same container I've deployed in the previous section:

    promote to prod:
      when: manual
      needs: [deploy]
      image:
        name: bitnami/kubectl:latest
        entrypoint: ['']
      stage: promote
      script: |
        export KUBE_NAMESPACE=${CI_PROJECT_NAME}-${CI_COMMIT_SHORT_SHA}
        export KUBE_INGRESS_HOST=${CI_PROJECT_NAME}.k0s.gaudi.sh
        ./sh/configure-kubectl.sh
        ./sh/promote.sh

As in the previous deploy job, kubectl is configured with the configure-kubectl.sh script.

Then, the promote.sh script is executed:

    ...
    # delete existing ingress
    kubectl delete ingress \
      --all-namespaces \
      --field-selector metadata.name=api-prod \
      -l app=${CI_PROJECT_NAME},tier=backend
    # add new ingress
    cat <<EOF | kubectl apply -f -
    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: api-prod
      labels:
        app: ${CI_PROJECT_NAME}
        tier: backend
    ...
      rules:
      - host: ${KUBE_INGRESS_HOST}
    ...

Now both URLs return the same version value since they point to the same deployment:

    $ curl https://7c77eb36.nodejs.k0s.gaudi.sh/version
    7c77eb36
    $ curl https://nodejs.k0s.gaudi.sh/version
    7c77eb36

7. The delete stage

To clean up deployments, I'm adding a fourth stage to delete Kubernetes resources. Docker images are not removed from the Container Registry, though.

To remove all resources attached to the deployment, I simply delete the Kubernetes namespace:

    delete env:
      when: manual
      needs: [deploy]
      image:
        name: bitnami/kubectl:latest
        entrypoint: ['']
      stage: delete
      script: |
        export KUBE_NAMESPACE=${CI_PROJECT_NAME}-${CI_COMMIT_SHORT_SHA}
        ./sh/configure-kubectl.sh
        kubectl delete namespace $KUBE_NAMESPACE
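Deleting a namespace removes everything in it, and the GitLab service account is a cluster admin, so a guard before the delete command may be worth considering. The sketch below only accepts namespaces that follow the project-hash naming scheme used in this pipeline. The is_review_namespace helper is a hypothetical addition and not part of the original job.

```shell
#!/bin/sh
# Return success only when the namespace looks like "<project>-<something>",
# which is the naming scheme the deploy job uses.
# $1 = project name, $2 = namespace to check.
is_review_namespace() {
  case "$2" in
    "$1"-?*) return 0 ;;
    *) return 1 ;;
  esac
}

# Possible usage in the delete job:
# is_review_namespace "$CI_PROJECT_NAME" "$KUBE_NAMESPACE" \
#   && kubectl delete namespace "$KUBE_NAMESPACE"
```

With this check, an empty or mistyped KUBE_NAMESPACE value stops the job instead of reaching kubectl.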

Next step

All stages of the pipeline are set up. Now I must add two things to make it work:

- the Dockerfile to build the Docker image in the package stage.

- the Kubernetes manifests I'm applying in deploy.sh with the kubectl apply -f manifests/ command in the deploy stage.